WER we are and WER we think we are

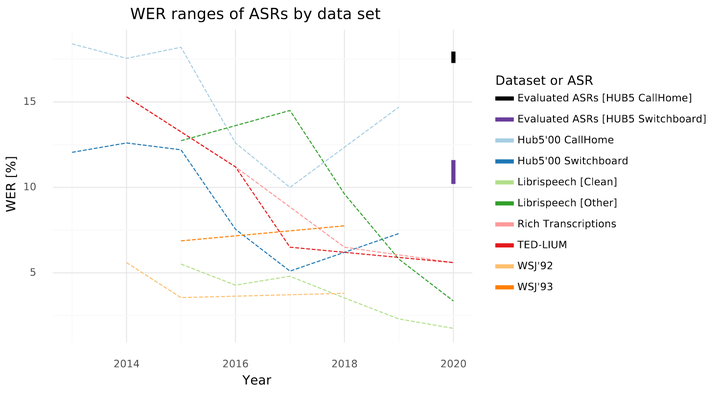

WER ranges in ASR systems. Reference values taken from the Wer are we report \cite{synnaeve_2020} and the \textit{Papers with Code} website \cite{papers_with_code} for ASR solutions published in the last 5 years. Outliers were removed for the sake of figure readability.

Abstract

Natural language processing of conversational speech requires high-quality transcripts. In this paper, we express our skepticism towards recent reports of very low Word Error Rates (WERs) achieved by modern Automatic Speech Recognition (ASR) systems on benchmark datasets. We outline several problems with popular benchmarks and compare three state-of-the-art commercial ASR systems on an internal dataset of real-life spontaneous human conversations and on the public HUB'05 benchmark. We show that the WERs obtained on these data are significantly higher than the best reported results. We formulate a set of guidelines which may aid in the creation of real-life, multi-domain datasets with high-quality annotations for training and testing robust ASR systems.